Design

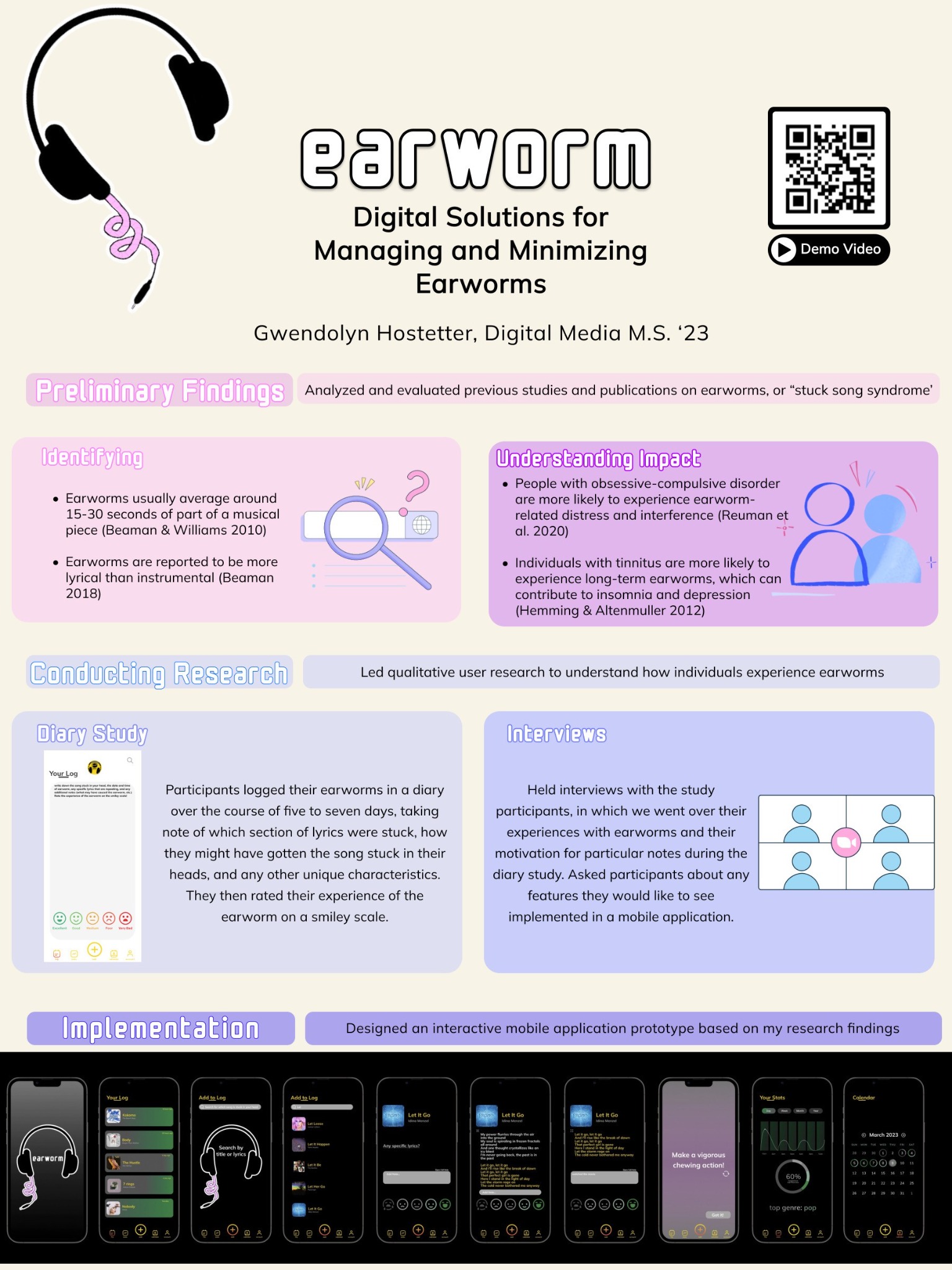

Earworm

Earworm is a mobile application prototype in which users track the songs stuck in their heads, known as "earworms." The application provides peer-reviewed tips on how to manage and minimize disruptive earworms.

August 2022 - May 2023 (9 months)

- Led qualitative user research to test the application's design and features, using Qualtrics and a booklet-based diary study completed by participants.

- Conducted interviews with participants to further refine the app.

- Conducted a competitive analysis of other tracking applications.

- Created the application's design and wireframes in Figma.

- Wrote my Master's thesis about the design process, presented the app at the Georgia Institute of Technology's Demo Day, and defended the project and thesis to the Digital Media department.

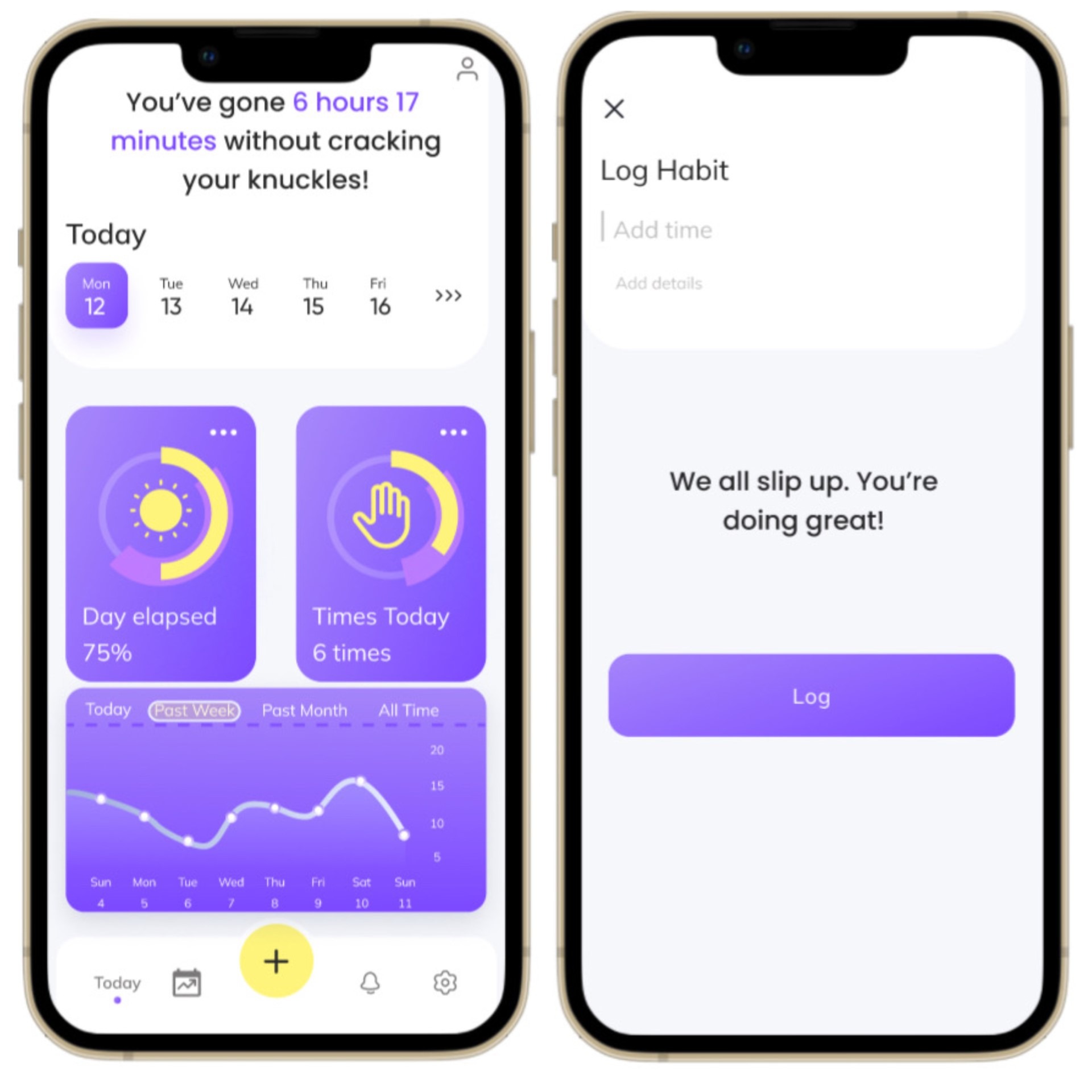

Habit Breaker

Made via Figma in February 2022

Habit Breaker is a manual-tracking mobile application prototype designed to help users reduce and eventually quit bad habits. The user logs each time they act on a bad habit, and the app tracks their progress and offers tips on quitting in the notifications tab.

Matmerize

May 2022 - August 2022 (3 months)

I worked as a frontend developer intern, developing user-facing layouts for Matmerize's service, PolymRize, to improve the user experience. I created frontend prototypes in Figma and templated the pages using Jinja2, Flask (Python), and React.

I also worked in the existing frontend codebase to implement features requested by backend developers.

I created user interfaces for an internal data management system and connected the frontend to the backend to make the system functional.

I signed a nondisclosure agreement and cannot present the work I did for Matmerize, but I'd be happy to clarify my role and the design tools and programming languages that I worked with.

VR Work

DreamKast

Spring 2023 - Interactive Narrative Final Project

Co-designed a VR installation that allows users to explore multiple film adaptations of Shakespeare's Hamlet. Drawing on the play's question of "To be or not to be," the installation lets users track their "indecisiveness" by showing a record of their switches between adaptations. Each scene features the famous soliloquy and has been altered with Midjourney and Gen-1 by Runway to evoke the dreamlike state of being that Shakespeare describes.

In my role, I utilized Mozilla Hubs & Spoke to create the VR space, created AI-generated videos with Midjourney and Gen-1 by Runway, edited the videos with iMovie, co-developed an early prototype in Figma, and assisted with the interactive features via A-Frame.

Blue Crane VR Dance

January 2022 - May 2022 (5 months)

I was on a team of Georgia Tech graduate students collaborating with Zoo Atlanta to increase visitor interaction. Our team designed a virtual reality rhythm game to educate visitors about the blue cranes housed at the zoo. Players mimic the birds' mating dance to learn more about how these animals interact in nature.

I primarily used Blender and Unity to work on the game's interface, while other teammates programmed the game via C#.

Parasite (2019) VR House

Spring 2022 - Reality Experience Design Final Project

I was on a team of Georgia Tech students who designed rooms from the set of Parasite (2019) in VR using Mozilla Hubs & Spoke. The project is intended to educate users about the film's techniques and elements, and to spark discussion about the class struggles it presents. Everything in the rooms was created and textured in Blender.

You can access the living room, bunker, and backyard below. (Spoilers!)

AI Holodeck

August 2021 - December 2021 (5 months)

In Star Trek: The Next Generation, the fictional crew uses their Holodeck to represent virtual objects in a 3D space. These objects can be moved around and have physical characteristics such as size and shape. Our team worked on an artificial intelligence application modeled after the Star Trek Holodeck that uses Natural Language Processing (NLP) to turn a spoken or typed command into a visual scene. From the input sentence, the AI Holodeck collects objects and their properties and infers positional relationships between them, creating a scene template. It then fills in the scene by generating additional objects.

During the Fall 2021 semester, I worked in the Georgia Tech Expressive Machinery Lab on a team designing HolodeckCLIP and its interface. We used Python to generate a scene and perform a CLIP (Contrastive Language-Image Pre-Training) search. I primarily worked in Unity to generate scenes from the original Python script, making them accessible in VR.